Non-determinism is a superpower

Over the last couple of weeks I wrote a new tool that has me really excited.

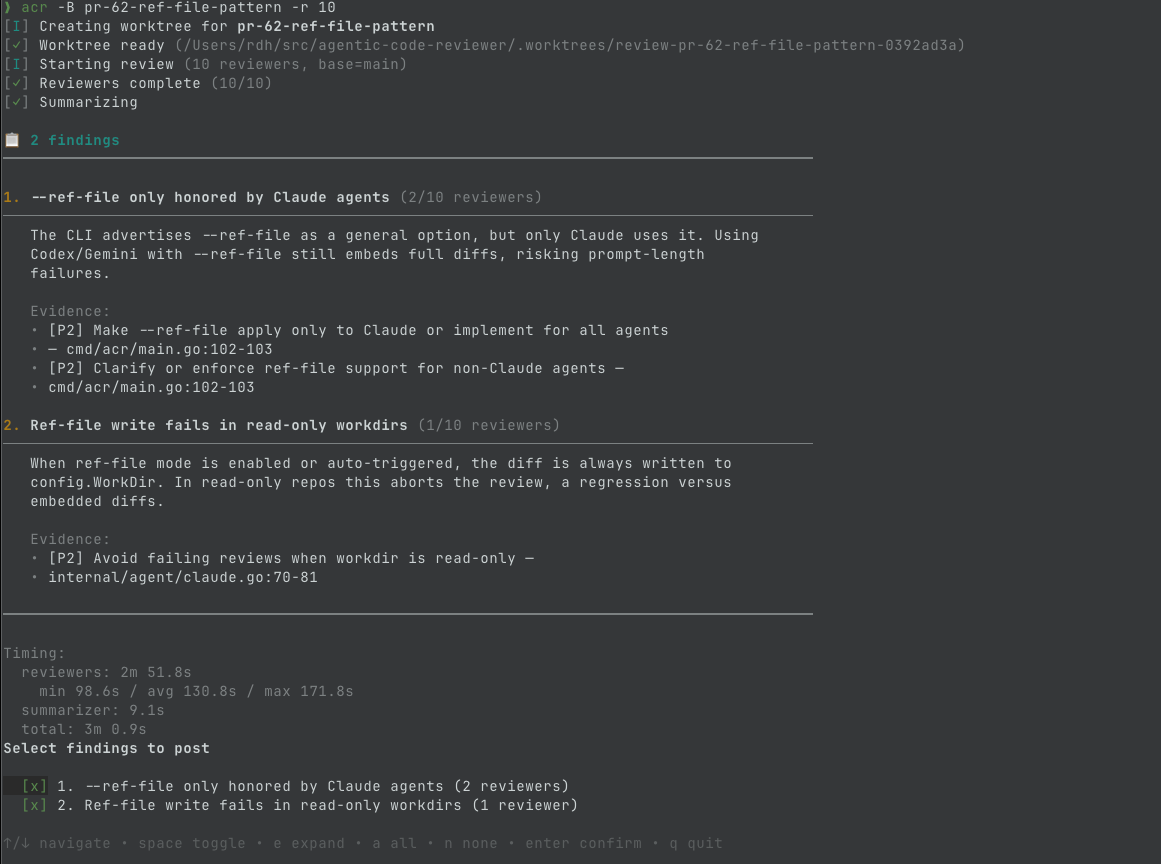

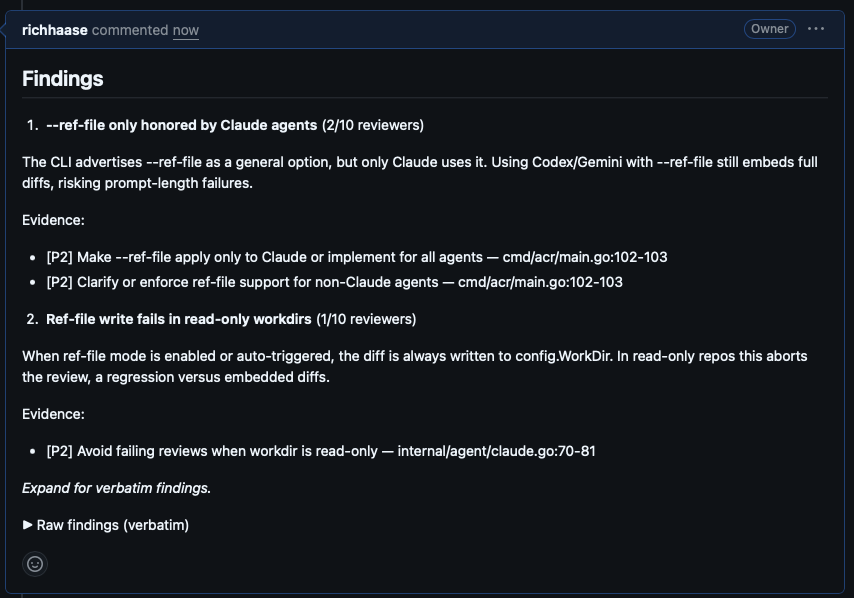

The tool is called agentic-code-reviewer, or ACR. It does exactly what it sounds like: it uses AI coding agents to perform code reviews. If this sounds completely underwhelming to you, that’s fair. I haven’t told you the good part yet. The good part is that ACR launches multiple parallel reviewers, then aggregates and summarizes the unique findings, with confidence scores based on the number of reviewers who called out each issue. ACR also automates posting review findings to PRs, which is handy.

Why did I build ACR?

Initially, I was just trying to save myself time. With AI agents writing all the syntax, we produce a lot more code, and I’ve been spending a lot more time reviewing it. So naturally, as the lazy programmer I was raised to be, I started thinking about repetitive tasks I could automate.

I started by thinking about how much time I was spending, before even looking at a PR, just running codex review to collect its automated review comments, which are quite good in most cases. It was tedious, and I don’t like tedious tasks, but I kept doing it because I noticed that if I ran codex review enough times I tended to find real bugs, even edge cases that might bite me in the future. It was worth the time both at work and in my personal projects. But I’m lazy, and I found myself losing track of how many reviews I’d run on a PR, or worse, I’d forget to run reviews entirely and get distracted by other tasks. My process was effective but inefficient. This was particularly painful on my personal projects, where I often don’t have other humans available to review my code, or if I do, they are volunteering their time and I want to be respectful of that kindness. So I wrote a little script to run the reviews for me, and it became a critical tool in my OSS projects.

Automated code reviews

For a week or two I used this little script of mine, and it saved me a ton of effort. I would launch the script, then come back half an hour later to detailed reviews from my cadre of reviewers. That was great, but it created a new problem.

Codex review output can be dense, and with five reviewers you might end up with two pages of findings to read through. Even worse, the findings often overlap, reported with different line numbers and slightly different wording, so I was now spending my time de-duplicating output before I could post helpful findings to a PR for further review.

So I extended the script with a summarizer agent that grouped findings and generated a nice-looking report I could paste into PRs. The whole report-pasting routine lasted about a day before I decided I didn’t want to be bothered with that either, so I added the ability for ACR to post code review findings directly to GitHub.
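To make the fan-out and de-duplication steps concrete, here is a minimal Python sketch. It is not ACR’s code. The `Finding` type, the `review_fn` callable, and the thresholds are all hypothetical, and the text-similarity heuristic is a simple stand-in for the summarizer agent described above, which would do the grouping with an LLM instead.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Finding:
    reviewer: int  # which reviewer run reported it
    path: str      # file the finding refers to
    line: int      # line number as reported (may drift between runs)
    message: str   # free-text description


def run_reviewers(review_fn, count):
    """Run `count` independent reviews in parallel and flatten their findings.

    `review_fn` is a hypothetical callable that takes a reviewer index and
    returns a list of Finding objects (for example by shelling out to a
    review tool and parsing its output).
    """
    with ThreadPoolExecutor(max_workers=count) as pool:
        batches = list(pool.map(review_fn, range(count)))
    return [finding for batch in batches for finding in batch]


def same_issue(a, b, max_line_gap=3, min_similarity=0.6):
    """Heuristic (an assumption): same file, nearby line, similar wording."""
    if a.path != b.path or abs(a.line - b.line) > max_line_gap:
        return False
    ratio = SequenceMatcher(None, a.message.lower(), b.message.lower()).ratio()
    return ratio >= min_similarity


def group_findings(findings):
    """Greedily cluster findings that appear to describe the same issue."""
    groups = []
    for finding in findings:
        for group in groups:
            # Compare against the first finding in each existing group.
            if same_issue(group[0], finding):
                group.append(finding)
                break
        else:
            groups.append([finding])
    return groups
```

Each group then becomes one entry in the report, and the size of the group says how many reviewer runs raised that issue.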

Somewhere during this process it clicked for me why multiple parallel reviewers were better than a single reviewer: because the LLMs are non-deterministic.

Non-determinism is good?

Non-determinism seems bad. People are losing their minds over AI not being deterministic: if it gives a different answer every time, how can we trust it?! But while building ACR, first as a simple script and then rewriting it in Go after some interested coworkers got a peek at it, I started realizing that non-determinism can be a superpower.

ACR shows that more reviewers find more issues and produce better code reviews precisely because the agents are non-deterministic while sharing the same goal. If the agents were deterministic, they would all produce the same results, and running the review multiple times would be a pure waste of tokens. But LLMs are probabilistic, so not only do you get better reviews with more tries, you can also assign a confidence/importance level to any finding based on the number of reviewers that called it out.
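A back-of-the-envelope sketch of why this works, under two loudly stated assumptions: each reviewer run catches a given issue with the same probability `p`, and runs are independent of each other. Neither is measured from ACR, and the 30% figure below is purely illustrative. Under those assumptions the chance that at least one of `n` runs catches the issue is `1 - (1 - p)^n`, so an issue a single run catches 30% of the time is caught by at least one of five runs about 83% of the time. The second function shows one simple way to turn reviewer agreement into a confidence score, using hypothetical issue identifiers.

```python
from collections import Counter


def detection_probability(p, reviewers):
    """Chance that at least one of `reviewers` independent runs flags an issue
    that a single run flags with probability `p` (assumes independence)."""
    return 1 - (1 - p) ** reviewers


def confidence_scores(reports):
    """Map each issue to the fraction of reviewers that reported it.

    `reports` holds one collection of issue identifiers per reviewer; an
    issue counts at most once per reviewer.
    """
    counts = Counter(issue for report in reports for issue in set(report))
    return {issue: count / len(reports) for issue, count in counts.items()}


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(n, round(detection_probability(0.3, n), 3))
    print(confidence_scores([{"a", "b"}, {"a"}, {"a", "c"}, {"a"}, {"a", "b"}]))
```

An issue every reviewer reports scores 1.0, while one that only a single reviewer out of five reports scores 0.2. A low score does not mean the finding is wrong, only that fewer runs surfaced it.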

Are you starting to get ideas? I was.

The main idea that came up for me is this: “What if the best system designs can be evolved rather than designed?”

Bear with me for a second, here’s my thinking:

- Traditionally, software was expensive and hard to build, so naturally we treated the software as a prized possession, something to be cared for, maintained, and enhanced over the years.

- The traditional way of doing things was predicated on high cost of production.

- If software is no longer expensive and hard to build, the traditional way of doing things loses its foundation.

The alternative to carefully crafting software seems to be convergence.

Rather than designing and spec-ing a system down to the nuts and bolts, an alternative I have been exploring is to loosely define an idea, then let multiple parallel agents each build the full solution with no opportunity to ask questions. A selector agent then reviews the solutions and picks or synthesizes the best one, and the outcomes can be amazingly good.
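The shape of that loop can be sketched in a few lines of Python. Everything here is hypothetical: `build_fn` stands in for an agent that builds a full solution from a loose idea, and `select_fn` stands in for the selector agent. The sketch shows only the fan-out and selection structure, not how either agent would work.

```python
from concurrent.futures import ThreadPoolExecutor


def converge(idea, build_fn, select_fn, candidates=5):
    """Build `candidates` independent solutions to `idea`, then select one.

    `build_fn(idea, seed)` and `select_fn(idea, solutions)` are hypothetical
    callables standing in for the builder and selector agents.
    """
    with ThreadPoolExecutor(max_workers=candidates) as pool:
        # Each builder works alone, with no chance to ask questions.
        solutions = list(
            pool.map(lambda seed: build_fn(idea, seed), range(candidates))
        )
    return select_fn(idea, solutions)


if __name__ == "__main__":
    # Toy stand-ins: a "solution" is a dict with a made-up quality score.
    def toy_build(idea, seed):
        return {"idea": idea, "seed": seed, "tests_passed": (seed * 3) % 5}

    def toy_select(idea, solutions):
        return max(solutions, key=lambda s: s["tests_passed"])

    print(converge("build me a widget", toy_build, toy_select))
```

A selector that synthesizes rather than picks would return a new solution assembled from several candidates instead of one element of the list.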

Convergence as design

ACR is a simple case of the kind of convergent software building I am thinking about. In ACR, convergence is a single pass, not multiple generations, and the result is always a synthesis, since we care about what every reviewer has found. I can imagine a more complex system that uses multiple iterations of parallel runs to build a complete system, and perhaps even learns as it goes with some occasional human steering.

I’m exploring this concept more. As inference costs drop, I imagine that being able to say “build me a widget” and then getting five working widgets to choose from represents the kind of virtuous feedback loop that was dreamed of in the Agile Manifesto (and subsequently crushed by the agile industrial complex). More importantly, humans are great at imagination and not nearly as good at clearly defining the things we imagine. But I don’t know anyone who can’t tell me what they like or don’t like when they see it.

It’s an exciting time to work in software. I hope you all are having as much fun learning as I am.